
Key Points

- Fashion-MNIST contains images from ten classes in total.

- Run the code on the CPU: tf.config.set_visible_devices(tf.config.list_physical_devices("CPU"))

- Get the Fashion-MNIST dataset: fashion_mnist = keras.datasets.fashion_mnist

- Split the original training data into a validation set and a training set: x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

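- Standardize the data with scaler = StandardScaler(). StandardScaler only accepts 2-D input (samples × features), so each image is flattened to 784 values before scaling and reshaped to (28, 28, 1) afterwards: x_train_scaled = scaler.fit_transform(x_train.astype(np.float32).reshape(55000, -1)).reshape(-1, 28, 28, 1)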

- Create the model: model = keras.models.Sequential()

- Add the first convolutional layer, which also declares the input shape: model.add(keras.layers.Conv2D(filters = 64, kernel_size = 3, padding = 'same', activation = 'relu', input_shape = (28, 28, 1)))

- Pooling (max pooling is the most common choice): model.add(keras.layers.MaxPool2D())

- Add another convolutional layer: model.add(keras.layers.Conv2D(filters = 32, kernel_size = 3, padding = 'same', activation = 'relu'))

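- Flatten: the convolutional output is 4-D (batch, height, width, channels), and Flatten reshapes it to 2-D (batch, features) for the Dense layers: model.add(keras.layers.Flatten())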

- Add a fully connected hidden layer (its input size comes from Flatten, so no input_shape is needed): model.add(keras.layers.Dense(512, activation = 'relu'))

- Add a second fully connected layer: model.add(keras.layers.Dense(256, activation = 'relu'))

- Add the output layer (one softmax unit per class): model.add(keras.layers.Dense(10, activation = 'softmax'))

- Inspect the model: model.summary()

- Compile the model: model.compile(loss = 'sparse_categorical_crossentropy', optimizer = 'adam', metrics = ['accuracy'])

- Train the model: history = model.fit(x_train_scaled, y_train, epochs = 10, validation_data = (x_valid_scaled, y_valid))

- Evaluate the model: model.evaluate(x_test_scaled, y_test)

- Plot the training history with a given figure size: pd.DataFrame(history.history).plot(figsize = (8, 5))

- Show grid lines: plt.grid(True)

- Set the y-axis range (plt.gca() returns the current axes): plt.gca().set_ylim(0, 1)

- Display the figure: plt.show()
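Max pooling, listed above, keeps the largest value in each window. The NumPy sketch below assumes the defaults of Keras's `MaxPool2D()`: a 2×2 window, stride 2 and `'valid'` padding. It shows how a feature map shrinks and why an odd size such as 7 becomes 3: the last row and column cannot fill a whole window, so they are dropped. The function name `max_pool_2x2` is our own, not part of Keras.

```python
import numpy as np

def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max pooling, stride 2, 'valid' padding, on a (height, width, channels) array."""
    h, w, c = x.shape
    out_h, out_w = h // 2, w // 2          # equals (h - 2) // 2 + 1 for h >= 2
    x = x[: out_h * 2, : out_w * 2, :]     # drop a trailing row/column that cannot fill a window
    # Split each spatial axis into (blocks, 2) and take the maximum inside every 2x2 block
    return x.reshape(out_h, 2, out_w, 2, c).max(axis=(1, 3))

feature_map = np.arange(16, dtype=np.float32).reshape(4, 4, 1)
print(max_pool_2x2(feature_map)[:, :, 0].tolist())  # [[5.0, 7.0], [13.0, 15.0]]
print(max_pool_2x2(np.zeros((7, 7, 32))).shape)     # (3, 3, 32)
```

Pooling has no trainable weights. It only reduces the spatial size, which cuts the computation in later layers.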

1 Fashion-MNIST Classification

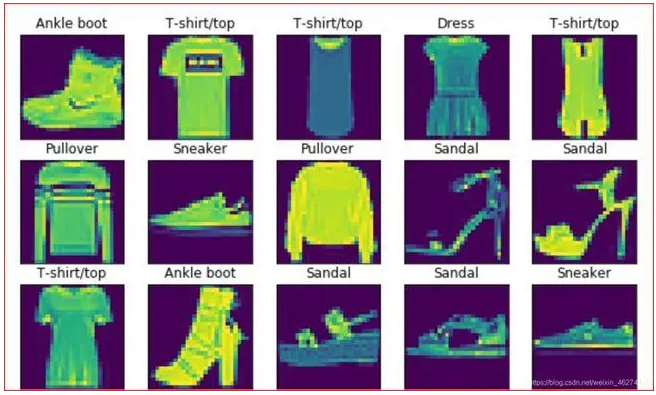

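Fashion-MNIST contains images from ten classes. Each class has 6,000 training images and 1,000 test images, so the training set and the test set contain 60,000 and 10,000 images respectively. The test set is used to evaluate the model's performance.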

Each input image is 28 pixels high and 28 pixels wide, and all images are grayscale. The ten Fashion-MNIST classes are T-shirt/top, trouser, pullover, dress, coat, sandal, shirt, sneaker, bag, and ankle boot.
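`load_data()` returns labels as integers from 0 to 9, not as class names. A lookup list turns them back into readable names. The order below follows the dataset's label table (0 = T-shirt/top through 9 = ankle boot). `class_names` and `label_to_name` are illustrative names of our own.

```python
# Label index -> class name, in Fashion-MNIST label order (0-9)
class_names = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
               "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]

def label_to_name(label: int) -> str:
    """Translate an integer label from load_data() into a readable class name."""
    return class_names[label]

print(len(class_names))   # 10
print(label_to_name(9))   # Ankle boot
```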

1.1 Imports

import numpy as np

from tensorflow import keras

import tensorflow as tf

import pandas as pd

import os

import matplotlib.pyplot as plt

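from sklearn.preprocessing import StandardScaler

# Run on the CPU only: make the physical CPUs the only visible devices (call before TensorFlow initializes any device)
cpu = tf.config.list_physical_devices("CPU")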

tf.config.set_visible_devices(cpu)

print(tf.config.list_logical_devices())

1.2 Loading the Data

fashion_mnist = keras.datasets.fashion_mnist

(x_train_all, y_train_all), (x_test, y_test) = fashion_mnist.load_data()

x_valid, x_train = x_train_all[:5000], x_train_all[5000:]

y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

1.3 Standardization
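The split holds out the first 5,000 of the 60,000 training images for validation and keeps the remaining 55,000 for training. The test set is not touched. The sketch below uses placeholder arrays of zeros standing in for the real images and labels. It confirms the sizes that the later `reshape(55000, -1)` and `reshape(5000, -1)` calls rely on.

```python
import numpy as np

# Placeholder arrays with the same shapes as the training split returned by load_data()
x_train_all = np.zeros((60000, 28, 28), dtype=np.uint8)
y_train_all = np.zeros(60000, dtype=np.uint8)

# First 5,000 samples -> validation, remaining 55,000 -> training
x_valid, x_train = x_train_all[:5000], x_train_all[5000:]
y_valid, y_train = y_train_all[:5000], y_train_all[5000:]

print(x_valid.shape, x_train.shape)  # (5000, 28, 28) (55000, 28, 28)
```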

scaler = StandardScaler()

x_train_scaled = scaler.fit_transform(x_train.astype(np.float32).reshape(55000, -1)).reshape(-1, 28, 28, 1)

x_valid_scaled = scaler.transform(x_valid.astype(np.float32).reshape(5000, -1)).reshape(-1, 28, 28, 1)

x_test_scaled = scaler.transform(x_test.astype(np.float32).reshape(10000, -1)).reshape(-1, 28, 28, 1)

1.4 Building the Model
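`StandardScaler` expects a 2-D array of shape `(n_samples, n_features)`. That is why each 28 × 28 image is flattened to 784 features and reshaped back to `(28, 28, 1)` afterwards; the trailing channel of size 1 is the grayscale input shape `Conv2D` is given. The mean and standard deviation of each pixel are fitted on the training set only and then reused for the validation and test sets.

The NumPy sketch below reproduces that per-pixel standardization on random placeholder images. The helper names are hypothetical. Leaving zero-variance pixels unscaled mirrors what `StandardScaler` does.

```python
import numpy as np

def fit_standardizer(x_flat: np.ndarray):
    """Per-feature (per-pixel) mean and standard deviation, from training data only."""
    mean = x_flat.mean(axis=0)
    std = x_flat.std(axis=0)
    std[std == 0] = 1.0  # constant pixels are left unscaled instead of dividing by zero
    return mean, std

def transform(x_images: np.ndarray, mean, std) -> np.ndarray:
    n = x_images.shape[0]
    flat = x_images.astype(np.float32).reshape(n, -1)     # (n, 784): the 2-D layout the scaler needs
    return ((flat - mean) / std).reshape(-1, 28, 28, 1)   # back to (n, 28, 28, 1) for Conv2D

rng = np.random.default_rng(0)
x_train = rng.integers(0, 256, size=(1000, 28, 28), dtype=np.uint8)  # placeholder images
x_valid = rng.integers(0, 256, size=(200, 28, 28), dtype=np.uint8)

mean, std = fit_standardizer(x_train.astype(np.float32).reshape(len(x_train), -1))
x_train_scaled = transform(x_train, mean, std)
x_valid_scaled = transform(x_valid, mean, std)  # reuses the training statistics
print(x_train_scaled.shape, x_valid_scaled.shape)  # (1000, 28, 28, 1) (200, 28, 28, 1)
```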

model = keras.models.Sequential()

# filters: number of convolution kernels, i.e. output channels

# Convolution

model.add(keras.layers.Conv2D(filters = 64,kernel_size = 3,padding = 'same',activation = 'relu',
                              # Tensors are (batch_size, height, width, channels); input_shape omits batch_size
                              input_shape = (28, 28, 1))) # (28, 28, 64)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (14, 14, 64)

# Convolution

model.add(keras.layers.Conv2D(filters = 32,kernel_size = 3,padding = 'same',activation = 'relu')) # (14, 14, 32)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (7, 7, 32)

# Convolution

model.add(keras.layers.Conv2D(filters = 32,kernel_size = 3,padding = 'same',activation = 'relu')) # (7, 7, 32)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (3, 3, 32): 'valid' pooling rounds 7 / 2 down to 3

# Flatten the 4-D (batch, height, width, channels) output into 2-D (batch, features)

model.add(keras.layers.Flatten()) # (288,)

model.add(keras.layers.Dense(512, activation = 'relu'))

model.add(keras.layers.Dense(256, activation = 'relu'))

model.add(keras.layers.Dense(10, activation = 'softmax'))

model.compile(loss = 'sparse_categorical_crossentropy',optimizer = 'adam',metrics = ['accuracy'])

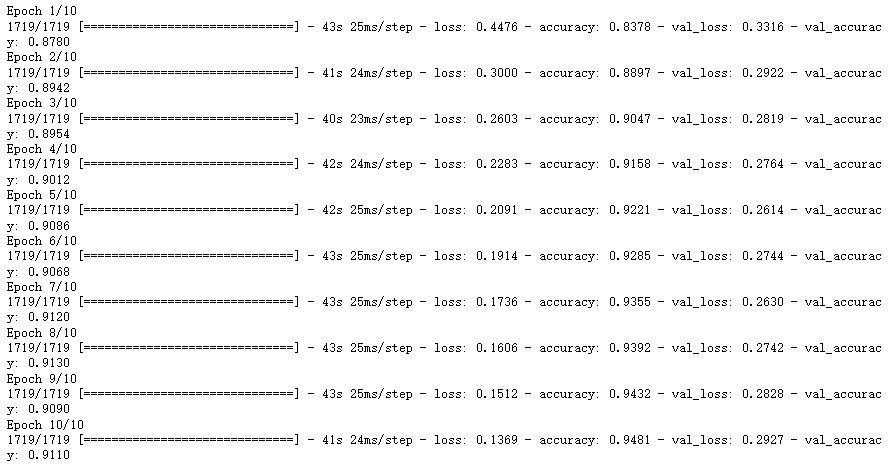
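The shape comments in the model code follow two rules:

- A 3×3 `Conv2D` with `padding='same'` keeps height and width and replaces the channel count with `filters`.
- The default `MaxPool2D()` halves height and width, rounding down.

The plain-Python sketch below traces this model layer by layer; the helper names are our own. It counts parameters with the standard formulas: (kernel_h × kernel_w × in_channels + 1) × filters for a convolution, and (inputs + 1) × units for a dense layer. Under these assumptions the per-layer figures should match the Param # column of `model.summary()`.

```python
def conv2d_same(shape, filters, kernel_size=3):
    """Conv2D(padding='same', strides=1): height/width unchanged, channels -> filters."""
    h, w, c = shape
    params = (kernel_size * kernel_size * c + 1) * filters  # kernel weights + one bias per filter
    return (h, w, filters), params

def max_pool2d(shape, pool_size=2):
    """MaxPool2D() defaults: 2x2 window, stride 2, padding='valid'; no parameters."""
    h, w, c = shape
    return ((h - pool_size) // pool_size + 1, (w - pool_size) // pool_size + 1, c), 0

def dense(n_in, units):
    """Dense: one weight per input per unit, plus one bias per unit."""
    return units, (n_in + 1) * units

shape, total = (28, 28, 1), 0
layers = [("conv 64", lambda s: conv2d_same(s, 64)), ("pool", max_pool2d),
          ("conv 32", lambda s: conv2d_same(s, 32)), ("pool", max_pool2d),
          ("conv 32", lambda s: conv2d_same(s, 32)), ("pool", max_pool2d)]
for name, step in layers:
    shape, params = step(shape)
    total += params
    print(f"{name:8s} -> {shape}, params={params}")

n = shape[0] * shape[1] * shape[2]  # Flatten
print(f"flatten  -> ({n},)")
for units in (512, 256, 10):
    n, params = dense(n, units)
    total += params
    print(f"dense {units:<3d}-> ({n},), params={params}")
print("total params:", total)  # total params: 310218
```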

1.5 Training the Model

history = model.fit(x_train_scaled, y_train, epochs = 10, validation_data = (x_valid_scaled, y_valid))
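`fit` minimizes the `sparse_categorical_crossentropy` loss chosen in `compile`. With integer labels, so no one-hot encoding is needed, this loss is the batch mean of −log p(true class). Here p comes from the softmax output layer. The `accuracy` metric is the fraction of samples whose highest-probability class equals the label.

The NumPy sketch below shows simplified versions of softmax, the loss and the accuracy on hand-picked probabilities (not real model output). It omits the numerical safeguards a library would add, and the function names are our own.

```python
import numpy as np

def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax; subtracting the row max avoids overflow in exp."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def sparse_categorical_crossentropy(y_true: np.ndarray, probs: np.ndarray) -> float:
    """Mean of -log(probability assigned to the true class); y_true holds integer labels."""
    return float(-np.mean(np.log(probs[np.arange(len(y_true)), y_true])))

def accuracy(y_true: np.ndarray, probs: np.ndarray) -> float:
    """Fraction of rows whose argmax equals the integer label."""
    return float(np.mean(probs.argmax(axis=1) == y_true))

# Hand-picked probabilities for 3 samples over 3 classes (the real model outputs 10 per sample)
probs = np.array([[0.7, 0.2, 0.1],
                  [0.1, 0.8, 0.1],
                  [0.5, 0.3, 0.2]])
y_true = np.array([0, 1, 2])

print(sparse_categorical_crossentropy(y_true, probs))  # about 0.7298
print(accuracy(y_true, probs))                          # 2 of 3 correct, about 0.667
print(softmax(np.array([[2.0, 1.0, 0.1]])))             # probabilities that sum to 1
```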

1.6 Evaluating the Model

model.evaluate(x_test_scaled, y_test) # [loss, accuracy]: [0.32453039288520813, 0.906000018119812]

2 Adding More Convolutional Layers

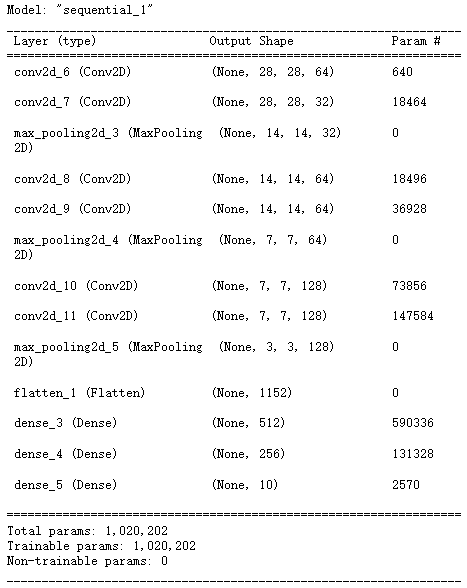

2.1 Building the Model

model = keras.models.Sequential()

# filters: number of convolution kernels, i.e. output channels

# Convolution

model.add(keras.layers.Conv2D(filters = 64,kernel_size = 3,padding = 'same',activation = 'relu',
                              # Tensors are (batch_size, height, width, channels); input_shape omits batch_size
                              input_shape = (28, 28, 1))) # (28, 28, 64)

model.add(keras.layers.Conv2D(filters = 32,kernel_size = 3,padding = 'same',activation = 'relu')) # (28, 28, 32)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (14, 14, 32)

# Convolution

model.add(keras.layers.Conv2D(filters = 64,kernel_size = 3,padding = 'same',activation = 'relu')) # (14, 14, 64)

model.add(keras.layers.Conv2D(filters = 64,kernel_size = 3,padding = 'same',activation = 'relu')) # (14, 14, 64)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (7, 7, 64)

# Convolution

model.add(keras.layers.Conv2D(filters = 128,kernel_size = 3,padding = 'same',activation = 'relu')) # (7, 7, 128)

model.add(keras.layers.Conv2D(filters = 128,kernel_size = 3,padding = 'same',activation = 'relu')) # (7, 7, 128)

# Pooling (max pooling is the most common choice)

model.add(keras.layers.MaxPool2D()) # (3, 3, 128): 'valid' pooling rounds 7 / 2 down to 3

# Flatten the 4-D (batch, height, width, channels) output into 2-D (batch, features)

model.add(keras.layers.Flatten()) # (1152,)

model.add(keras.layers.Dense(512, activation = 'relu'))

model.add(keras.layers.Dense(256, activation = 'relu'))

model.add(keras.layers.Dense(10, activation = 'softmax'))

model.compile(loss = 'sparse_categorical_crossentropy',optimizer = 'adam',metrics = ['accuracy'])

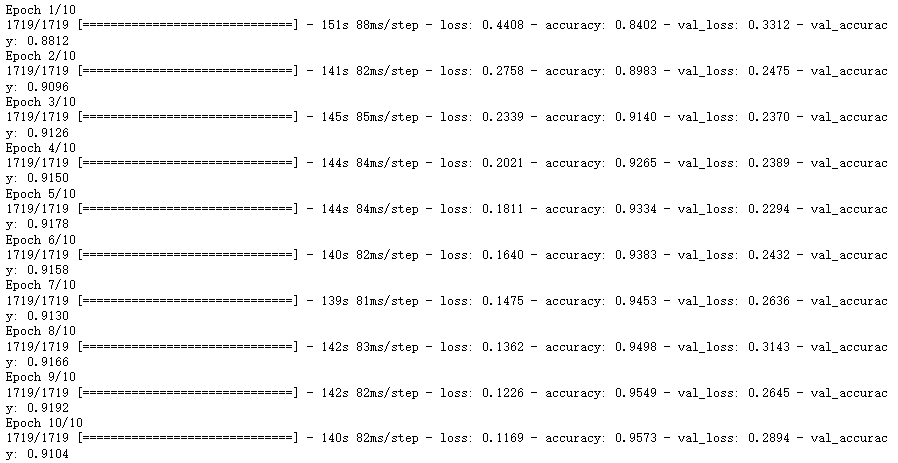
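This model stacks two convolutions before each pooling step and widens the last block to 128 filters. The final feature map is therefore (3, 3, 128) instead of (3, 3, 32), so Flatten produces 1,152 features instead of 288. The first Dense layer alone grows from 147,968 to 590,336 parameters.

The sketch below compares the two architectures. It assumes 3×3 `Conv2D` layers with `padding='same'`, one default `MaxPool2D()` after each block, and the Dense stack 512-256-10 used in both sections. The function `count_params` and its block format are our own.

```python
def count_params(conv_blocks, dense_units=(512, 256, 10), input_shape=(28, 28, 1)):
    """Return (Flatten output size, total trainable parameters).

    conv_blocks: list of blocks; each block is a list of filter counts for 3x3
    'same' Conv2D layers and is followed by one MaxPool2D() (2x2, stride 2, 'valid').
    """
    h, w, c = input_shape
    total = 0
    for block in conv_blocks:
        for filters in block:
            total += (3 * 3 * c + 1) * filters  # 3x3 kernel weights plus one bias per filter
            c = filters                          # 'same' padding keeps height and width
        h, w = h // 2, w // 2                    # 2x2 'valid' pooling rounds down
    flat = h * w * c                             # size of the Flatten output
    n_in = flat
    for units in dense_units:
        total += (n_in + 1) * units              # weights plus one bias per unit
        n_in = units
    return flat, total

section_1 = [[64], [32], [32]]                 # model from section 1
section_2 = [[64, 32], [64, 64], [128, 128]]   # model from section 2
print(count_params(section_1))  # (288, 310218)
print(count_params(section_2))  # (1152, 1020202)
```

Most of the extra parameters come from the larger Flatten output feeding Dense(512), not from the added convolution kernels themselves.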

2.2 Training the Model

history = model.fit(x_train_scaled, y_train, epochs = 10, validation_data = (x_valid_scaled, y_valid))

2.3 Evaluating the Model

model.evaluate(x_test_scaled, y_test) # [0.3228122293949127, 0.9052000045776367]